In our paper A Brief History of Agile we looked at the evolution of the agile software development approach, which briefly covered the ‘Waterfall Dead-end’. This post looks in more detail at the factors that made Waterfall appear attractive to a large number of competent people, although most of them were not involved in developing major software programs. The people actually managing major software developments were always largely in favor of iterative and incremental approaches, which evolved into Agile some 20+ years later. The focus of this discussion is on the structured ‘Waterfall’ approach to developing software.

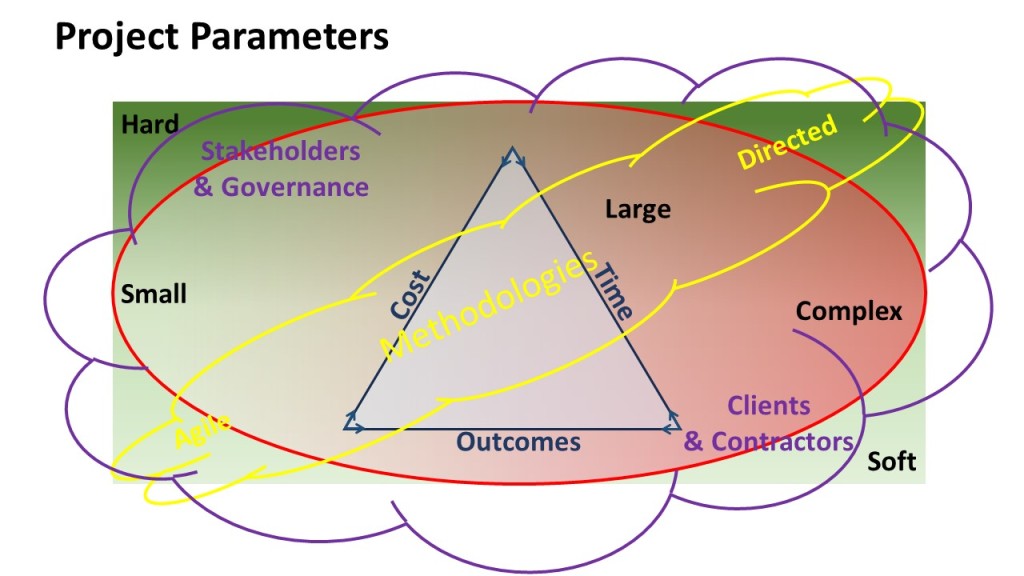

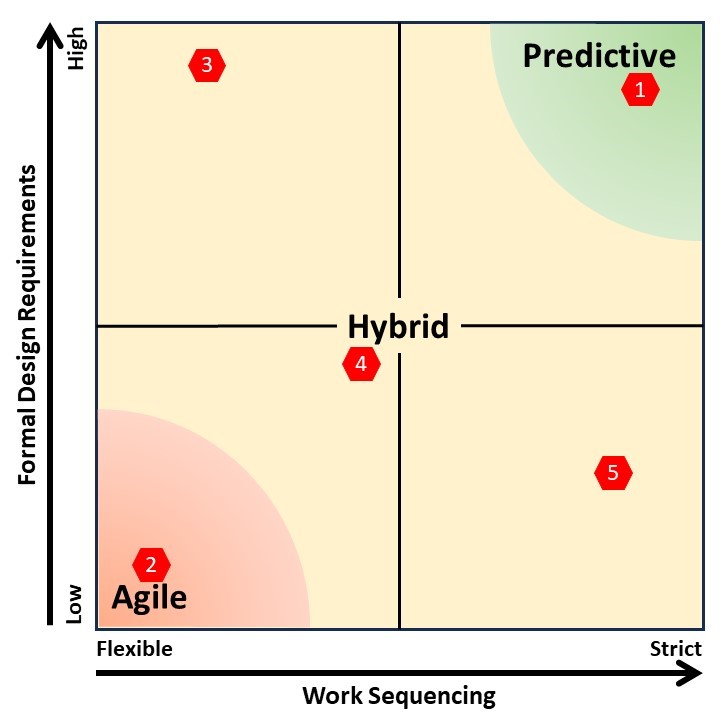

Just for clarification, Waterfall has never been used for other types of projects, and is not a synonym for plan-driven, predictive projects.

In most hard[1] projects, the design has to be significantly progressed before much work can be done, and the work has to be done in a defined sequence. For example, before concreting this house slab, the precise position of every plumbing fitting and wall has to be known (i.e., the architectural plans finalized), so that a pipe of the right size is positioned exactly under a fitting or centered within a wall line. The loadings on the slab also have to be known so that the engineering design correctly calculates the required thicknesses of slab, ground beams, and steel reinforcement. Once all of the necessary design has been done, the various trade works have to be completed in the right sequence and checked before the slab can be poured. Once the concrete is set, any change is very expensive!

This is quite different to software projects, which are a class of soft project that is particularly amenable to incorporating change and to being developed incrementally and iteratively to minimize test failures and rework. This iterative and incremental approach was being used by most major software development projects prior to the introduction of Waterfall in 1988, so what was it that made the Waterfall concept seem attractive? To understand this, we need to go back roughly 20 years before the Agile Manifesto was published, to the USA Defense Department of the 1980s:

- The Cold War was in full swing and the fear of Russia’s perceived lead in technology was paramount. Sputnik 1 had been launched in 1957 and the USA still felt they were playing catch-up.

- Defense projects were becoming increasingly complex systems of systems and software was a major element in every defense program.

- Complexity theory was not generally understood; early developments were largely academic theories[2].

- CPM was dominant and appeared to offer control of workflows.

- EVM appeared to offer control of management performance.

- Disciplined cost controls were more than 100 years old.

The three dominant control paradigms (CPM, EVM, and cost control) appeared to offer certainty in most projects, but there seemed to be very little control over the development of software. This was largely true: none of these approaches offers much assistance in managing the creative processes needed to develop a new software program, and the concept of Agile was some 20+ years in the future.

In this environment, Waterfall appeared to offer the opportunity to bring control to software projects by mimicking other hard engineering projects:

- The management paradigm most familiar to the decision makers was hard engineering: you need the design of an aircraft or missile to be close to 100% complete before cutting and riveting metal. Computers were new, big pieces of ‘metal’, so why treat them differently?

- For the cost of 1 hour’s computer time, you could buy a couple of months of software engineering time – spending time to get the design right before running the computer nominally made cost-sense.

- The ability to work on-line was only just emerging and computer memory was very limited. Most input was batch loaded using punch cards or tape (paper or magnetic). Therefore, the concept of ‘design the code right, load it once, and expect success’ may not have seemed too unrealistic.

The problem was that nothing actually worked. Iterative and incremental development means looking for errors in small sections of code and using the corrected, working code as the foundation for the next step. Waterfall failures were ‘big-bang’, with the problems hidden in thousands of lines of code and often nested, one within another. Finding and fixing errors was a major challenge.

To the US DoD’s credit, they ditched the idea of Waterfall in remarkably quick time for a large department. Waterfall was formally required by the US DoD for a period of 6 years, between 1988 and 1994; both before and after this period, iterative and incremental approaches were allowed.

The reasons why the name Waterfall still drags on are covered in two papers:

A Brief History of Agile

How should the different types of project management be described?

Conclusion

While Waterfall was an unmitigated failure, significantly increasing the time and cost needed to develop major software programs, the decision to implement Waterfall by the US DoD is understandable and reasonable in the circumstances. The software development methods in use at the time (the 1980s) were largely iterative and incremental, and were failing to meet expectations. The new idea of Waterfall offered a solution. It was developed by people with little direct experience of major software development (who were therefore not tarnished with the perceived failures). The advice of people with significant software development experience was ignored because they were already perceived to be failing. The result was 6 years of even worse performance before Waterfall was dropped as a mandated method. The mistake was not listening to the managers with direct experience of developing major software systems. But these same people were the ones managing the development of software that was taking much longer and costing far more than allowed in the budget.

The actual cause of the perceived failures (cost and time overruns) was unrealistic expectations caused by a lack of understanding of complexity leading to overly optimistic estimates. Everyone could see the problems with the current approach to software development and thought Waterfall was a ‘silver bullet’ to bring control to a difficult management challenge.

Unfortunately, the real issues were in a different area: underestimating the difficulties involved in software development, and a lack of appreciation of the challenges in managing complex projects. This issue is still not fully resolved. Even today, the complexities of managing major software developments are underestimated most of the time.

For more on the history of Agile, see: https://mosaicprojects.com.au/PMKI-ZSY-010.php#Agile

[1] The definition of hard and soft projects can be found at: https://mosaicprojects.wordpress.com/2023/01/21/hard-v-soft-projects/

[2] For more on complexity see: https://mosaicprojects.com.au/PMKI-ORG-040.php#Overview